In the first part of this post, I started to answer a reader’s question about what information you need before you estimate a project and build a schedule. The reader, Wayne, said that he didn’t “get a solid sense of the relative timing of the activities (especially the requirements activity),” because it wasn’t clear how much information you need to know about the project before you get started. One thing that Jenny and I come back to again and again is that there is no single “best,” one-size-fits-all way of running a project. A schedule is a great tool for planning a project, but you have to actually take a close look at what you know about your project before you start building a schedule. And you need to come to grips with the reality that what you know today could easily change. Even if you have a perfect understanding of today’s needs (which, in reality, never actually happens), that doesn’t mean the world won’t change and your users won’t need different software tomorrow.

Don’t get me wrong: I do think schedules are great tools for planning. You can use a schedule to organize the effort and manage your project’s dependencies. And you can use it to communicate with your team. A schedule’s a great way to get a lot of difficult-to-manage information down on paper so you can see it all in one place. There have been many times over the years when it was only after I had a schedule all sorted out that I could see the project clearly. That’s when I could realize, “Hey, we can save time by doing these two things concurrently,” or, “Uh-oh, we’ve got the riskiest stuff on our critical path. That’s not a good idea!” That’s how a schedule can be a great tool for understanding your work.

But really, while those are important aspects of a project schedule, they aren’t the main way that we use schedules.

Think about what happens when you give a schedule to someone. If that person’s on your team, they’ll probably groan – maybe not out loud to you if you’re the boss, but we all groan a little inside when someone hands us a schedule that we need to meet. Just like a contractor who doesn’t really care whether the renovation on your house takes six weeks or eight weeks, your team doesn’t really care how long their work takes, as long as they have enough time to do it and don’t have to work nights and weekends to scramble to meet an unrealistic deadline. (Obviously, teams take pride in working quickly, but let’s be realistic here.)

But if you show a schedule to someone who’s not on your team, that schedule makes them happy. They’re generally relieved to see it, because now they know more about when you’re delivering the software. But it’s not the whole schedule they care about. Most of the time, when you hand a schedule to a client, a user or a manager at your company, they see one thing: the deadline. Which you just committed to.

And that’s the real nature of the schedule. Your project’s schedule contains a list of everything that you know you have to do – and it’s your way of telling the rest of the world that you’re committed to doing every single item on that list by a certain date. A schedule isn’t really about getting technical input from your team, or about planning out the work. Those things are nice side-effects of building a schedule, but there are tools that you can use to do those things that don’t involve committing to a date.

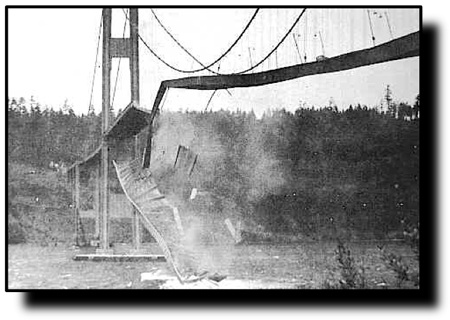

No, a schedule, at its core, is really about making commitments to other people. Schedules aren’t just there to be followed. They’re there to represent the real-life commitments that you made to other people. If you meet every commitment you made but go entirely off plan, your project will still be successful. But if you “work the plan” in perfect, excruciating detail, but you still manage to break the commitments that you made – even if it’s because of changes you couldn’t control – your project will be a failure. And that’s the power a schedule brings to your project. Like any tool, it can be used for good or malice.

That’s the perilous aspect of building a schedule: as soon as you commit yourself to it, you’ve introduced potential negative consequences that weren’t there before you put dates down on paper. (No wonder programmers are so reluctant to give estimates!)

Project schedules… not for the commitment-phobic

Jenny and I do a lot of speaking, and when we do we often find ourselves bringing up the idea that the point of any document is to communicate. Let’s say you’re my client, and we’ve got a requirements specification for a piece of software that I’m building for you. The specification itself, the words printed on paper, that’s not important. What’s important is that what’s in my head matches what’s in your head, that the software I’m planning on building is as close as possible to the software that you’re expecting me to deliver. It just so happens that a software requirements specification is a great tool for making sure that what’s in your head matches what’s in mine.

But the document does something else, too. Once we both have the same understanding, writing it down in a specification and agreeing on it means that we both made a commitment. I made a commitment to build the software that’s described in the document. But just as importantly, you’re making a commitment to me: that if I deliver software that meets that specification, you’ll accept it as complete. If you have changes, that’s fine. We just need to update the specification so that it has those changes.

(Oh, and just in case I didn’t make it clear, that “specification” could be a stack of index cards with user stories written on them, and we could make those updates every week or even every day, if that’s what the business needs.)

A schedule works the same way. If we write down and agree on a schedule, that means I promised to give you a certain set of deliverables on certain dates, and you promised to accept them.

At this point, someone who’s studied for the PMP exam might bring up “progressive elaboration,” which reflects the idea that a team can’t know everything about the project they’re working on at the very beginning. We don’t know everything about how the software will be built and tested when we’re still working on the design, and we don’t know everything about the design when we’re working on requirements. When we get to the next checkpoint we may realize that our earlier estimates were wrong, or that our whole approach was wrong. If we’re lucky, we’ve put together a team that accepts this as a basic reality, and plans all work in iterations that deliver complete, working software at the end of each iteration. (And yes, if you’re studying for the PMP exam, you do need to know about iteration!)

But can you see how, even with all of that, it still revolves around commitments?

That’s my point. A schedule is first and foremost a tool for managing your commitments, and only after that is it a tool for actually planning the work. (For a distant third, it’s a record of how the project turned out that you can use to generate metrics.) But the big point is that the schedule doesn’t commit you. Your commitments commit you. The schedule just keeps your commitments on paper in one place.

Now, while all of this may sound negative, it’s not. A good software team that can meet their commitments gains trust from their users, clients and stakeholders. If you’ve got a reputation for making commitments and sticking to them, you’ve got something really powerful. You’ve got the trust of the people you depend on to drive your project forward. And that’s where the schedule can be a really positive thing. To your users, it represents stable software they can depend on. To your team, it represents normal days without crazy pressure, without working late nights or weekends. When you take your commitments seriously, your schedule represents the truth about your project at any given point, and people come to depend on it.

I want to finish off by excerpting a section from “Applied Software Project Management,” because I think it cuts to the core of the point I’m trying to make about schedules and commitments, and how you can use them effectively.

Use the Schedule to Manage Commitments

A project schedule represents a commitment by the team to perform a set of tasks. When the project manager adds a task to the schedule and it’s agreed upon by the team, the person who is assigned to that task now has a commitment to complete it by the task’s due date. Senior managers feel that they can depend on the schedule as an accurate forecast of how the project is going to go—when the schedule slips, it’s treated as an exception, and an explanation is required. For this reason, the schedule is a powerful tool for commitment management.

One common complaint among project managers attempting to improve the way their organizations build software is that the changes they make don’t take root. Typically, the project manager will call a meeting to announce a new tool or technique—he may ask the team to start performing code reviews, for example—only to find that the team does not actually perform the reviews when building the software. Things that seem like a good idea in a meeting often fail to “stick” in practice.

This is where the schedule is a very valuable tool. By adding tasks to the schedule that represent the actual improvements that need to be made—for example, by scheduling all of the review meetings—the project manager has a much better chance of gaining a real commitment from the team.

If the team does not feel comfortable making a commitment to the new practice, the disagreement will come up during the schedule review. Typically, when a project team member disagrees with implementing a new tool or technique, he does not bring it up during the meeting where it’s introduced. Instead, he will simply fail to use it, and build the software as he has on past projects. This is usually justified with an explanation that there isn’t enough time, and that implementing the change will make the task late.

By explicitly adding a task to the schedule, the project manager ensures that enough time is built in to account for the change. This cements the change into the project plan, and makes it clear up front that the team is expected to adopt the practice. More importantly, it is a good consensus-building tool because it allows team members to bring up the new practice when they review the project plan. By putting the change out in the open, the project manager encourages real discussion of it, and is given a chance to explain the reason for the practice during the review meetings. If the practice makes it past the review, then the project manager ends up with a real commitment from the team to adopt the new practice.

— Stellman & Greene, Applied Software Project Management, chapter 4 (O’Reilly, 2005)

I hope that this helps explain how we think a schedule can be used to help you and your team manage your projects more effectively and build better software.